Javascript Frameworks and SEO 101

In my experience, many SEOs haven’t worked with Javascript frameworks, or don’t know how to fully evaluate and address them from a search engine perspective. It doesn’t help that Javascript is tied to code and developers, which often brings friction for SEOs (if you haven’t experienced the rivalry between developers and SEOs in an in-house environment, you’re lucky). However, while it’s a daunting topic, especially if you aren’t familiar with it, it doesn’t need to be.

Keep in mind that while all of this is rooted in code and is fairly technical, you don’t need to know exactly how every piece fits together. You need to know how it works at a high level, what the potential problems are, and how to solve them. The actual implementation is something the developers can handle; you just need to give them guidelines for what needs to be done. Let’s break it down.

Common Frameworks, Terms and How It Works

The most common Javascript frameworks I encounter are Angular and React, with Vue coming in on occasion. Other frameworks also exist, but I don’t see them that often with my clients. At a high level though, the framework itself doesn’t matter for our purposes. What we care about are the SEO implications of a Javascript framework.

The two most important terms you need to be aware of regarding Javascript frameworks are:

- Server-Side Rendering (SSR)

- Client-Side Rendering (CSR)

Server-side rendering means that the content is rendered on the server. When the client (a browser or a bot) requests the page, the server responds with HTML that already contains the content, so the client doesn’t have to do any heavy lifting.

Client-side rendering means that the content is rendered by the client. The server sends a mostly empty HTML page along with Javascript files, and the client does the heavy lifting of downloading and running that code. The end result is the same content, but it only appears once the code has run.

Here’s an example of code from a site that uses Server-Side Rendering.

Here’s an example of code from a site that uses Client-Side Rendering.

We’re looking at the source code for both of these sites. Notice how much more code appears in the Server-Side Rendering example? By contrast, the Client-Side Rendering site has very little, because most of its content is generated by Javascript after the page loads.

When a user hits the SSR site, the content is ready to go. When a user hits the CSR site, their browser has to download the Javascript, run it, and build the content before anything shows up.
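To make the difference concrete, here’s a simplified TypeScript sketch of what each kind of server might send back. The `Product` data, the `renderSSR` and `renderCSRShell` functions, and `/bundle.js` are all hypothetical, and real frameworks produce far more markup, but the shape is the same: the SSR response already contains the text, while the CSR response is an empty container plus a script.

```typescript
// Hypothetical page data; in a real app this would come from a CMS or API.
interface Product {
  name: string;
  description: string;
}

const product: Product = {
  name: "Blue Widget",
  description: "A sturdy widget for everyday use.",
};

// Server-side rendering: the server builds the full HTML before responding,
// so the content is already in the response body.
// (No HTML escaping here, purely for brevity.)
function renderSSR(p: Product): string {
  return [
    "<!doctype html>",
    "<html><head>",
    `<title>${p.name}</title>`,
    "</head><body>",
    `<main><h1>${p.name}</h1><p>${p.description}</p></main>`,
    "</body></html>",
  ].join("\n");
}

// Client-side rendering: the server sends an empty shell plus a script.
// The content only appears after the browser downloads and runs /bundle.js.
function renderCSRShell(): string {
  return [
    "<!doctype html>",
    "<html><head>",
    "<title>Loading...</title>",
    "</head><body>",
    '<div id="root"></div>',
    '<script src="/bundle.js"></script>',
    "</body></html>",
  ].join("\n");
}

const ssrHtml = renderSSR(product);
const csrHtml = renderCSRShell();
```

If you only looked at `csrHtml`, the way a crawler does before rendering, you’d find no product information at all.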

In general, neither approach is better than the other for users. There are reasons for both, and each has its uses. SSR versus CSR matters for SEO because of how Googlebot (and most other search engine bots) crawls and processes the site.

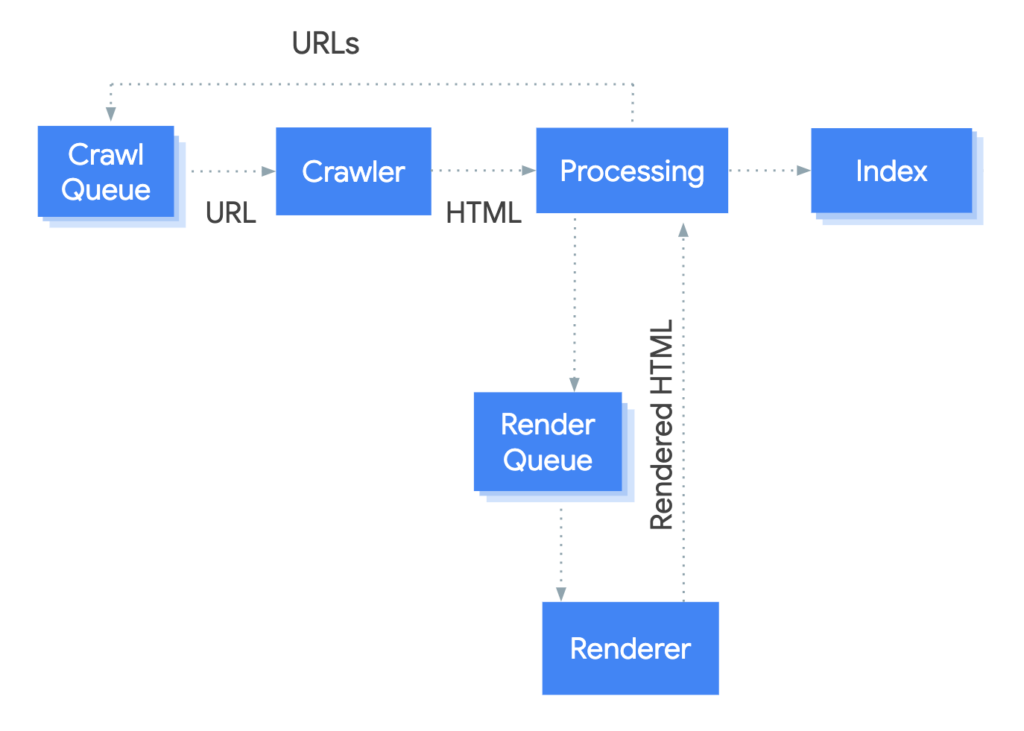

When Googlebot hits a site using CSR, it first crawls the initial HTML the server returns. If Google determines that Javascript needs to be rendered, the page is placed in a queue for the Web Rendering Service (WRS). Once the WRS executes the Javascript and renders the page, Google has access to all of the rendered content and factors it into its index. Here’s how Google shows it:

Now, I’ve seen Google claim that it renders this Javascript within seconds of encountering it. I don’t believe that in the slightest, as I’ve seen sites without dynamic rendering or a prerender service take weeks to get their pages’ full content to show up in Google. That might change in the future, but I wouldn’t rely on Google to process this quickly.

The Problem

There is a gap between when Google first crawls a page and when the WRS renders it. During that time, all Google has to work with is the initial HTML. For a site using CSR, that’s typically just the <head> plus a mostly empty body, and there’s no guarantee the site even puts important <head> information like the title, meta tags, and canonicals into that initial HTML (I’ve seen them injected by Javascript more often than I’d like). If the site uses Javascript to load all of the content in the body (see the CSR screenshot above), then until the page is rendered, all Google sees is the Javascript itself, not the content it produces. This means that until Google renders the page, it can’t fully identify what your page is about.
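To see what Google has to work with before rendering, look at the raw HTML response rather than the rendered page. Below is a rough TypeScript sketch of that check; the `inspectRawHtml` function and its regex tests are hypothetical, and a real audit should use a proper HTML parser. It flags a missing title, meta description, or canonical in the head and measures how much visible text the unrendered body contains.

```typescript
// Result of inspecting the raw (pre-Javascript) HTML a crawler first receives.
interface RawHtmlReport {
  hasTitle: boolean;
  hasMetaDescription: boolean;
  hasCanonical: boolean;
  bodyTextLength: number;
}

// Very rough, regex-based checks, only meant to illustrate what to look for.
function inspectRawHtml(html: string): RawHtmlReport {
  const head = html.match(/<head(?:\s[^>]*)?>([\s\S]*?)<\/head>/i)?.[1] ?? "";
  const body = html.match(/<body(?:\s[^>]*)?>([\s\S]*?)<\/body>/i)?.[1] ?? "";

  // Remove scripts first so Javascript code isn't counted as page text,
  // then strip the remaining tags and collapse whitespace.
  const visibleText = body
    .replace(/<script[\s\S]*?<\/script>/gi, "")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();

  return {
    hasTitle: /<title[^>]*>[^<]+<\/title>/i.test(head),
    hasMetaDescription: /<meta[^>]+name=["']description["'][^>]*>/i.test(head),
    hasCanonical: /<link[^>]+rel=["']canonical["'][^>]*>/i.test(head),
    bodyTextLength: visibleText.length,
  };
}
```

A near-zero `bodyTextLength` on a page that looks complete in the browser is the CSR pattern described above, and a `false` on any of the head checks means that tag only exists after Javascript runs, if at all.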

At best, you have a new page and it doesn’t really matter if it takes a couple of extra days or weeks for the content to show up. At worst, you swapped your entire site over to a Javascript framework with no prerender or dynamic rendering service employed, and now Google sees a blank slate instead of the content that used to be there. If that’s the case, the time before rendering is going to be a disaster and lead to lost traffic and rankings. I cannot stress enough how harmful this can be.

The Solution

Losing your traffic and rankings is a scary prospect. We already deal with enough stress from Google algorithm updates, and we don’t need any more on top of that. However, solving the potential problems that Javascript frameworks can bring isn’t as hard as you might think, and shouldn’t be a source of stress (unless the developers disregard everything you say).

The two terms you need to be familiar with are:

- Prerendering

- Dynamic Rendering

Prerendering means that a page on a CSR site is rendered ahead of time. Even though the site uses CSR, the server does the heavy lifting of processing all of the code in advance and hands over the already completed page. The browser or bot receiving it doesn’t need to render anything on its end.
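Because rendering a page ahead of time is expensive, prerendered snapshots are usually cached, which is also where the risk of serving stale content comes from. Here’s a rough TypeScript sketch of such a cache that throws a snapshot away when it’s too old or when the page’s content version changes. `PrerenderCache`, the `render` callback, and the `contentVersion` idea are all hypothetical; in practice `render` would drive a headless browser or a prerender service and would be asynchronous.

```typescript
// A prerendered snapshot of one URL.
interface Snapshot {
  html: string;
  renderedAt: number; // milliseconds since epoch
  contentVersion: string; // hypothetical, e.g. a CMS revision id or content hash
}

// Hypothetical prerender cache. `render` stands in for whatever actually
// executes the page's Javascript; it is synchronous only to keep this short.
class PrerenderCache {
  private readonly snapshots = new Map<string, Snapshot>();
  private readonly render: (url: string) => string;
  private readonly maxAgeMs: number;

  constructor(render: (url: string) => string, maxAgeMs: number) {
    this.render = render;
    this.maxAgeMs = maxAgeMs;
  }

  // Returns the cached HTML if it is younger than maxAgeMs and was rendered
  // from the current content version; otherwise renders and stores it again.
  get(url: string, currentVersion: string, now: number = Date.now()): string {
    const cached = this.snapshots.get(url);
    if (
      cached !== undefined &&
      now - cached.renderedAt < this.maxAgeMs &&
      cached.contentVersion === currentVersion
    ) {
      return cached.html;
    }
    const html = this.render(url);
    this.snapshots.set(url, { html, renderedAt: now, contentVersion: currentVersion });
    return html;
  }
}
```

Checking both age and a content version is one way to limit the stale-cache problem; a simpler setup might rely on age alone and accept some lag after content changes.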

Dynamic rendering is the method used to decide which visitors get which version of the page. The server identifies the type of visitor by its user-agent and then decides whether to serve the prerendered version or let the visitor render the content itself.

Note: The user-agent is the string that identifies what is visiting the site, allowing servers to determine browser types, bots, etc.

These methods are used in tandem to make sure search engine bots get the content they need without having to wait for Javascript to be processed.

In order to avoid issues that would result in lost rankings, you need to serve a prerendered version of the page to Googlebot.

Here’s the simple version of how this works.

User A comes to the website. The server knows that User A is using Chrome because of the user-agent. There are no directives set up that say Chrome user-agents should receive prerendered content. The server delivers the regular page, which uses client-side rendering. User A’s browser renders the content.

Googlebot comes to the website. The server knows that Googlebot is Googlebot because the user-agent identifies it as such. The server has a directive that dictates that Googlebot user-agents should receive the prerendered page instead of the client-side rendered version of the page. The server gives Googlebot the prerendered page and Googlebot immediately has access to all of the content on the page. The WRS does not need to be utilized in order to load all of the content.

If dynamic rendering and prerendering are not used, Googlebot gets the regular client-side rendered version of the page and has to wait for the WRS to render the content before it can understand the page.
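The User A and Googlebot walkthrough boils down to one decision per request. Below is a minimal TypeScript sketch of that decision; `BOT_UA_PATTERNS`, `chooseVariant`, and `handleRequest` are hypothetical names, and a production setup would usually live in server or CDN middleware or use an existing prerender service rather than hand-rolled code.

```typescript
// Substrings that identify some common search engine crawlers in the
// User-Agent header. Illustrative only, not an exhaustive list.
const BOT_UA_PATTERNS: RegExp[] = [/Googlebot/i, /bingbot/i, /DuckDuckBot/i, /YandexBot/i, /Baiduspider/i];

type Variant = "prerendered" | "client-side";

// Decide which version of the page to send based only on the User-Agent.
// User-Agent strings are easy to spoof, so pair this with bot verification.
function chooseVariant(userAgent: string): Variant {
  return BOT_UA_PATTERNS.some((pattern) => pattern.test(userAgent)) ? "prerendered" : "client-side";
}

// Hypothetical request handler: returns the HTML to send for a URL.
function handleRequest(
  url: string,
  userAgent: string,
  getPrerenderedHtml: (url: string) => string,
  clientSideShell: string,
): string {
  return chooseVariant(userAgent) === "prerendered" ? getPrerenderedHtml(url) : clientSideShell;
}
```

For a Chrome user-agent, `handleRequest` returns the client-side shell and the browser renders it; for a Googlebot user-agent, it returns the prerendered HTML, so the content is available without waiting on the WRS.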

What do I tell my developers?

Let the developers know that they need to provide a fully rendered page to Googlebot. This means that all of the content generated by the Javascript framework must be delivered as static HTML. If someone viewed that HTML with Javascript disabled, they should still see all the same content, even if they don’t get the same experience.

Simply put, the solution is to use dynamic rendering to serve a prerendered page to Googlebot and other search engine bots, so the page is fully understood without having to wait for the content to render.
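One way to express the “same content” requirement as something checkable is to compare the text in the prerendered HTML with the text users see after Javascript runs. The TypeScript sketch below is a rough, word-level comparison; `visibleText` and `missingFromPrerender` are hypothetical helpers, and collecting the rendered text (for example, from a headless browser) is assumed to happen elsewhere.

```typescript
// Strip scripts, styles and tags from HTML, leaving collapsed visible text.
function visibleText(html: string): string {
  return html
    .replace(/<script[\s\S]*?<\/script>/gi, " ")
    .replace(/<style[\s\S]*?<\/style>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Returns the words users see after client-side rendering that are absent
// from the prerendered HTML served to bots. Empty means no obvious gaps.
function missingFromPrerender(prerenderedHtml: string, renderedText: string): string[] {
  const botWords = new Set(visibleText(prerenderedHtml).toLowerCase().split(" "));
  const userWords = renderedText
    .toLowerCase()
    .split(/\s+/)
    .filter((w) => w.length > 0);
  return Array.from(new Set(userWords)).filter((w) => !botWords.has(w));
}
```

Because it ignores word order and is punctuation-sensitive, treat a non-empty result as a prompt to look closer rather than as proof of a mismatch.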

Have them read through Google’s guide on Dynamic Rendering, and they’ll have a better understanding of what needs to be done, and you can rest easy until it’s time to test things out to make sure everything is working smoothly.

Here are a couple of extra things to keep in mind:

- It’s not considered cloaking as long as bots and users get the same content. Only the way the content is rendered changes.

- Not every visitor needs prerendered content; it’s mainly for bots.

- Because prerendering on the fly can be resource-intensive, prerendered pages are often cached. Be careful, though: if the page content changes and the cached prerendered version is old, bots won’t see anything new until the cache is refreshed.

- You should use a verification method (https://developers.google.com/search/docs/advanced/crawling/verifying-googlebot) to make sure that only legitimate bots receive prerendered content, since user-agent strings can be spoofed.
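The linked guide describes a two-step DNS check: reverse-resolve the visiting IP, confirm the hostname belongs to Google, then forward-resolve that hostname and confirm it points back to the same IP. The TypeScript sketch below covers only that decision logic; `isVerifiedGooglebot` and `GOOGLE_CRAWLER_DOMAINS` are hypothetical names, and the DNS lookups themselves are assumed to happen elsewhere (in Node, `dns.promises.reverse()` and `dns.promises.resolve4()` can provide them).

```typescript
// Domains Google's verification guide lists for Googlebot hostnames.
// Illustrative; check the current guide for the full, up-to-date list.
const GOOGLE_CRAWLER_DOMAINS = ["googlebot.com", "google.com"];

// clientIp: the IP address the request came from.
// reverseHostname: result of a reverse DNS lookup on clientIp.
// forwardIps: results of a forward DNS lookup on reverseHostname.
function isVerifiedGooglebot(clientIp: string, reverseHostname: string, forwardIps: string[]): boolean {
  const host = reverseHostname.toLowerCase().replace(/\.$/, ""); // drop a trailing dot
  // Require a leading "." so lookalikes such as "evilgooglebot.com" fail.
  const domainOk = GOOGLE_CRAWLER_DOMAINS.some((d) => host.endsWith("." + d));
  // The hostname must resolve back to the same IP that made the request.
  return domainOk && forwardIps.includes(clientIp);
}
```

Google’s guide is the authority on the exact domains and steps, so check it before relying on a hard-coded list like this one.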

Conclusion

That’s a lot to read, but don’t let it overwhelm you! Remember, this will feel daunting at first, but you can work through it. The main issue I see is that this whole topic feels out of reach. It’s not, and you’re more than capable of tackling it. One thing we did not cover is how to test for issues once a framework is implemented. You can head over to our other articles to learn how to tackle that.

Javascript Articles

https://searchmentors.io/troubleshooting-javascript-seo-part-1/

https://searchmentors.io/troubleshooting-javascript-seo-part-2/

https://searchmentors.io/user-agents-and-javascript-files/

Helpful Links

https://developers.google.com/search/docs/guides/dynamic-rendering

https://developers.google.com/search/docs/guides/javascript-seo-basics