Troubleshooting Robots.txt Issues

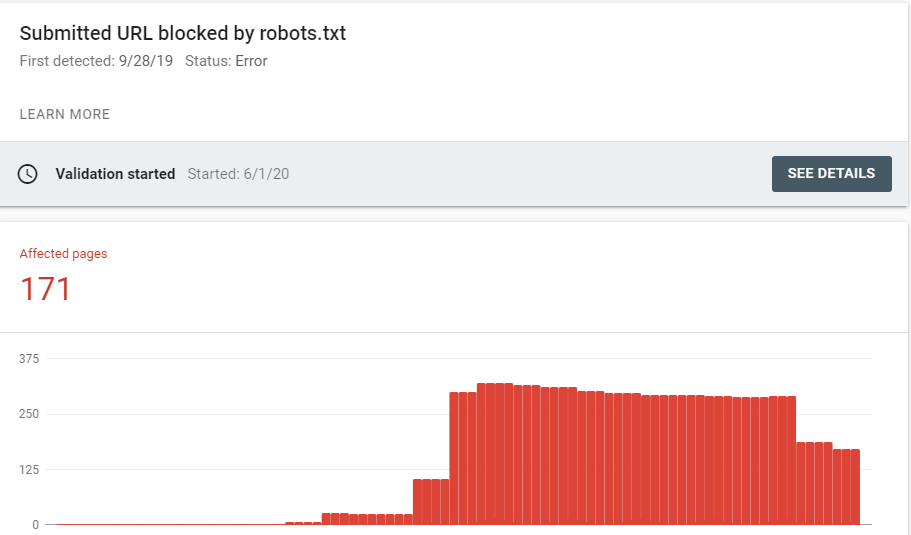

You’re going about your day, reviewing your performance in Search Console, when you decide to check your coverage report. You see a sea of red, and Google’s telling you that you have a bunch of submitted URLs that can’t be indexed because they are blocked by robots.txt.

Now, you know that some URLs should of course be blocked, but you end up finding URLs that shouldn’t be blocked in the list. Where do you go from here?

Potential Issues:

There are a couple of different areas you’ll want to check to determine why the page was blocked and whether it still is. Some of these are simple, but other times you’ll need to dig deeper.

We’d summarise the issues as follows:

- Robots.txt Change

- Redirects (Middleman) Block

- Server Errors

First Steps

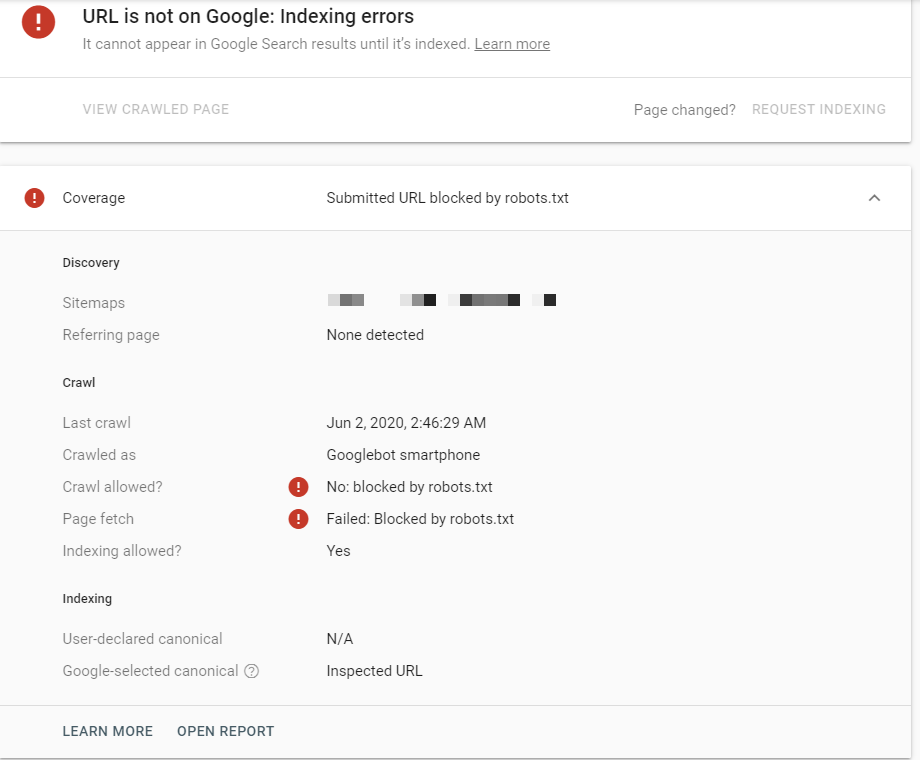

The first step you’ll want to take is to inspect the URL in Google Search Console and see what it says.

You should see something like this. Now, you’ll want to mark down that crawl date because it’s going to come in useful later on when running down the issue.

From here, you’ll want to do a Live Test, since you’re being shown Google’s last crawl (or indexed version if the page was indexed).

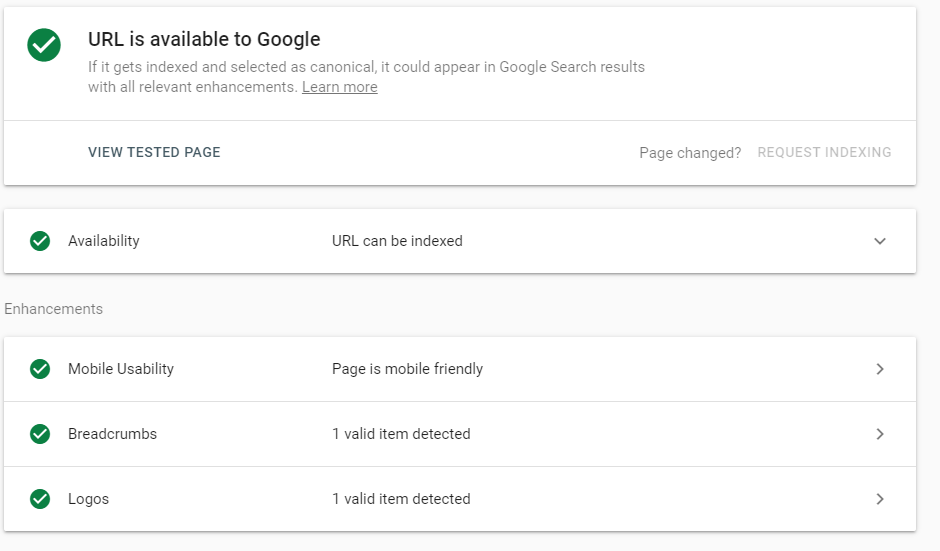

If you see this version of the page, you’re good to go! This means that as of now, Google can crawl the page and it’s no longer blocked. We’ll come back to how you can get Google to recrawl these URLs shortly.

If, however, you see an exact copy of the first image, then it’s time to diagnose the issue. Even if the live test says everything is good to go, it’s still a good idea to find out what happened so that you can fix a potential recurring issue.

Troubleshooting Step 1: Robots.txt Review

The first place to look is the robots.txt file located at the root of the domain (https://www.example.com/robots.txt).

You might see a variety of settings here, but at its most basic you should see this:

User-agent: *

Disallow:

This is telling bots that they can crawl everything. You may see slight changes where the User-agent calls out a bot and blocks them from an area of the site, or the entire site etc.

The first thing you want to do is look to see if your URL slug is caught in robots.txt.

As an example, let’s say that the URL https://www.example.com/badbots/index-me/ is a URL that should be indexed, but is coming up blocked.

If you see: Disallow: /badbots/ in the robots.txt, you’ve set the site up to prevent bots from crawling the /badbots/ folder. This is working as intended, and the URL should be blocked. If you want this URL to be indexed, you’ll need to move it out of the /badbots/ subfolder.

We recommend the tool https://technicalseo.com/tools/robots-txt/ when you’re diagnosing robots.txt issues. Throw your current robots.txt in the editor and select your URL and User-Agent. If the URL is allowed, it’ll show a green highlight on the rule that allows it, and if it’s not allowed it’ll highlight that rule with red to show you where the block is occurring.

Solution: Move the URL out of the disallowed folder, or remove the disallow if it is incorrect.
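If you’d rather check a URL from a script than from a web tool, here is a minimal sketch using Python’s built-in urllib.robotparser. The robots.txt content and the is_blocked helper are our own illustration, matching the /badbots/ example above. Keep in mind that this parser does simple prefix matching and doesn’t replicate every detail of how Googlebot reads robots.txt (wildcards, for example), so treat it as a first pass rather than the final word.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text disallows user_agent from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


# Hypothetical robots.txt that mirrors the /badbots/ example in this guide.
ROBOTS_TXT = """\
User-agent: *
Disallow: /badbots/
"""

print(is_blocked(ROBOTS_TXT, "https://www.example.com/badbots/index-me/"))   # True
print(is_blocked(ROBOTS_TXT, "https://www.example.com/goodbots/good-url/"))  # False
```

Paste in your own robots.txt and URL to see whether a rule is catching it.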

Troubleshooting Step 2A: Robots.txt Change

If you can’t find anything blocking the URL in robots.txt (which will be the case if you were told the URL was fine on a live test), it most likely means that the file was changed for a period of time. Remember when we told you to mark down that last crawl date?

First, ask your developers if they changed the robots.txt file on that date, or right before it. If they did, you’ve solved your problem. It’s possible that it was changed for a moment and Google just happened to crawl at that time, resulting in the blocked URL.

If the devs can’t remember, or you don’t have devs, we’re on to our next step in this process. Now, this may not work, but we’ve had success with it in the past, especially if the site is well known.

Head over to http://web.archive.org/ and throw your robots.txt URL into the Wayback Machine. If it has captures, check the ones from the last crawl date and a couple of days beforehand. If your robots.txt file was updated and the change was captured by the Wayback Machine, you’ll be able to spot the difference. To quickly check it, throw the captured version into the robots.txt tester we linked above.
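To save some clicking, you can build the Wayback Machine links for the crawl date and the days before it. This is a small sketch; the wayback_urls helper and the example date are our own assumptions. The archive resolves a dated link to the closest capture it has, which may be from a different day, so always check the capture date on the page you land on.

```python
from datetime import date, timedelta


def wayback_urls(robots_url: str, crawl_date: date, days_back: int = 2) -> list[str]:
    """Build Wayback Machine links for crawl_date and the days_back days before it."""
    urls = []
    for offset in range(days_back + 1):
        day = crawl_date - timedelta(days=offset)
        # Format: https://web.archive.org/web/<YYYYMMDD>/<original URL>
        urls.append(f"https://web.archive.org/web/{day:%Y%m%d}/{robots_url}")
    return urls


# Hypothetical last crawl date, as noted down from URL Inspection.
for link in wayback_urls("https://www.example.com/robots.txt", date(2024, 3, 15)):
    print(link)
```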

If you did find that the robots.txt was changed, you have a potential issue. We’ve seen cases where it would be changed to disallow the entire site a couple times a month. This could be due to a site setting or it could be due to your developers messing with things. You’ll want to get this fixed.

If you didn’t find your site in the Wayback Machine then you won’t have proof of this happening from this standpoint, though it’s still a possible outcome.

If you want your site’s robots.txt to be captured by the Wayback Machine regularly, you can use a script on a cron job that submits your URL to be captured daily. This will take a developer, so talk with your devs or reach out to us and we can find a solution for you. Alternatively, we know of tools that monitor for changes as well.

Solution: Require all future changes to robots.txt to be verified before they go live. If it’s a site issue, have your devs diagnose the issue and fix it.

Solution 2: Use a tool to alert you to any changes.
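If you’d like to roll your own alert, the core of it is comparing the robots.txt you fetched today with the copy you saved last time. Here is a rough sketch using only the standard library. The function names are our own, and how you store the previous copy and send the alert (a file, an email, a Slack message) is left up to you.

```python
import difflib
import urllib.request


def fetch_robots(url: str) -> str:
    """Download the current robots.txt. Run this from your scheduled job."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def robots_diff(previous: str, current: str) -> list[str]:
    """Return unified-diff lines between two robots.txt versions (empty if unchanged)."""
    return list(
        difflib.unified_diff(
            previous.splitlines(),
            current.splitlines(),
            fromfile="before",
            tofile="after",
            lineterm="",
        )
    )


# Hypothetical before/after: someone switched the site to "block everything".
before = "User-agent: *\nDisallow:\n"
after = "User-agent: *\nDisallow: /\n"
for line in robots_diff(before, after):
    print(line)
```

In a real job you would call fetch_robots("https://www.example.com/robots.txt"), compare it with the saved copy, and send yourself the diff whenever the list isn’t empty.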

Troubleshooting Step 2B: Middleman Block

A middleman block occurs when a URL is redirected through a page that is blocked in robots.txt. This doesn’t come up often, making it difficult to diagnose if you aren’t aware of the issue.

Before we start, make sure you know how to identify redirects. We recommend the plugin Ayima Redirect Path, or alternatively you can use the Network Tab in Chrome Dev Tools if you are familiar with it.
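If you prefer a script to a plugin, the sketch below follows a redirect chain one hop at a time and records every URL along the way. The names trace_redirects and head_request are our own. It only sees HTTP redirects (301, 302, 303, 307, 308), not JavaScript or meta refresh redirects, and some servers answer HEAD requests differently from GET, so cross-check anything surprising in your browser.

```python
import http.client
from typing import Callable, Optional
from urllib.parse import urljoin, urlsplit

# A fetch function takes a URL and returns (status code, Location header or None).
Fetch = Callable[[str], tuple[int, Optional[str]]]


def head_request(url: str) -> tuple[int, Optional[str]]:
    """Send a single HEAD request without following redirects."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=10)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=10)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def trace_redirects(url: str, fetch: Fetch = head_request, max_hops: int = 10) -> list[str]:
    """Return every URL visited, starting with url and ending at the final destination."""
    chain = [url]
    for _ in range(max_hops):
        status, location = fetch(chain[-1])
        if status not in (301, 302, 303, 307, 308) or not location:
            break
        # Location may be relative, so resolve it against the current URL.
        chain.append(urljoin(chain[-1], location))
    return chain
```

Calling trace_redirects("https://www.example.com/goodbots/good-url/") would return the full list of hops, which is exactly what you need for the examples that follow. The fetch parameter is there so you can swap in a different HTTP client if you have one you prefer.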

Alright, let’s walk through how this happens:

Example 1:

https://www.example.com/goodbots/good-url/ is redirected to https://www.example.com/badbots/good-url

What’s going on: Example 1

This case is the easiest to identify, as there isn’t a middleman. If you redirect a URL to a URL that is blocked in the robots.txt file, the destination URL can’t be crawled by Google, so the original URL is reported as blocked. This follows the same principle we shared in Step 1: Robots.txt Review.

Solution: Remove the disallow, remove the redirect, or redirect to an allowed URL.

Example 2:

https://www.example.com/goodbots/good-url/ is redirected to https://www.example.com/badbots/good-url

Then

https://www.example.com/badbots/good-url is then redirected to https://www.example.com/good-url

What’s going on: Example 2

This is the first type of robots.txt block you’ll find that involves a redirect. Consider this to be an Internal Middleman. When Googlebot crawls this URL, it’ll be blocked from accessing the final destination because the redirecting URL is in the blocked folder /badbots/. Google would still be able to access /good-url if it was linked elsewhere, but in this case Google can’t see the final redirect destination.

Solution: Remove the middle redirect and instead redirect directly to the final URL.

Example 3:

https://www.example.com/goodbots/good-url/ is redirected to https://www.thirdpartydomain.com/check/good-url

Then

https://www.thirdpartydomain.com/check/good-url is then redirected to https://www.example.com/good-url

What’s going on: Example 3

This is rare and you likely won’t come into contact with this type of problem, which makes it hard to diagnose if you do. You’d run into this if you used some form of queueing system on a website, or if you reroute URLs through a third party destination for any other reason.

In this case, you’re seeing that /goodbots/good-url/ is being redirected to a third party domain to perform a check or to hold the user for a short period of time. The third party domain URL is then redirected to the final destination of /good-url. What can happen in this situation, however, is that the third party domain has a robots.txt that blocks all crawlers. This would be considered an external middleman block.

If you want to identify the problem here, you’d need to view the robots.txt of the third party domain and check to see if the block is occurring there. At times the redirect is unnoticeable, so you’ll need to pay special attention to the redirects in either the Network panel of Chrome Dev Tools or Ayima’s Redirect Path plugin.

Solution: If you control the third party domain, update the robots.txt to prevent blocking. If you do not control the third party domain then get rid of the service.

Now example 3 may seem far fetched, and we did mention it doesn’t happen often. We ran into this issue with a client who handled sporting events. They used a ticket queue system for one of their biggest events of the year. The result was that Google was not able to crawl that URL and they didn’t rank because the only way to access that URL was through the redirect. The lesson here is to be careful about what you choose to use and always verify that it isn’t impacting crawlers.
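All three examples come down to the same check: take every URL in the redirect chain and test it against the robots.txt of the domain it lives on. Here is a minimal sketch of that idea. The first_blocked_hop helper and the robots.txt contents are our own assumptions for illustration, and Python’s built-in parser is a simplification of how Googlebot actually evaluates rules.

```python
from typing import Optional
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


def first_blocked_hop(
    chain: list[str],
    robots_by_host: dict[str, str],
    user_agent: str = "Googlebot",
) -> Optional[str]:
    """Return the first URL in the chain that its own host's robots.txt disallows."""
    for url in chain:
        robots_txt = robots_by_host.get(urlsplit(url).netloc)
        if robots_txt is None:
            continue  # No robots.txt supplied for this host; this sketch treats it as allowed.
        parser = RobotFileParser()
        parser.parse(robots_txt.splitlines())
        if not parser.can_fetch(user_agent, url):
            return url
    return None


# Hypothetical robots.txt files for the two domains in Example 3.
robots_by_host = {
    "www.example.com": "User-agent: *\nDisallow: /badbots/\n",
    "www.thirdpartydomain.com": "User-agent: *\nDisallow: /\n",
}
example_3 = [
    "https://www.example.com/goodbots/good-url/",
    "https://www.thirdpartydomain.com/check/good-url",
    "https://www.example.com/good-url",
]
print(first_blocked_hop(example_3, robots_by_host))
# https://www.thirdpartydomain.com/check/good-url
```

If the function returns a URL in the middle of the chain, you’ve found your middleman.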

Troubleshooting Step 2C: Server Errors (5XX)

The final occurrence that you’ll want to look for involves server errors (5XX status codes). If Googlebot gets a server error when it requests robots.txt, it will act as if robots.txt has been set to Disallow: /. If Google crawls a new URL while that server error is happening, the URL will be considered to be blocked by the robots.txt file.

In order to identify this issue, the easiest method is to ask IT or the developers to check if the server went down on, or the day before, the date Google listed as its last crawl. If they don’t know outright, you can try to rely on the Wayback Machine like we showed above, or get the server logs for the crawl date and check to see if Googlebot was receiving server errors.

Solution: In this case, there’s not always a lot you can do. It’s in your best interest to diagnose the issue, and if it repeatedly happens, look into new server hosting.
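If you can get hold of the access logs, a short script can pull out the requests where Googlebot received a 5XX on the crawl date. This sketch assumes the common Apache/Nginx "combined" log format, and the sample lines are made up for illustration. It also trusts the user-agent string, which can be spoofed, so treat the results as a lead rather than proof.

```python
import re

# Matches: [15/Mar/2024:10:12:01 +0000] "GET /robots.txt HTTP/1.1" 503
LOG_PATTERN = re.compile(
    r'\[(?P<day>[^:]+):[^\]]*\] "(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) '
)


def googlebot_server_errors(lines, day):
    """Return (path, status) for each Googlebot request on `day` that got a 5XX."""
    hits = []
    for line in lines:
        if "Googlebot" not in line:
            continue
        match = LOG_PATTERN.search(line)
        if match and match["day"] == day and match["status"].startswith("5"):
            hits.append((match["path"], int(match["status"])))
    return hits


# Hypothetical log lines, not taken from a real server.
SAMPLE_LOG = [
    '66.249.66.1 - - [15/Mar/2024:10:12:01 +0000] "GET /robots.txt HTTP/1.1" 503 0 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [15/Mar/2024:10:15:44 +0000] "GET /good-url HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [15/Mar/2024:10:16:02 +0000] "GET /good-url HTTP/1.1" 500 0 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
print(googlebot_server_errors(SAMPLE_LOG, "15/Mar/2024"))
```

A 5XX on /robots.txt itself around the crawl date is the strongest sign that this is what happened.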

Getting Google to Recrawl Blocked URLs

Once you’ve discovered the issue and gotten it resolved, it’s time to get Google to crawl these links again. There are a couple of ways to do this:

- Twitter Indexing

- Sitemap Indexing

Both of these methods work well. If you can upload a sitemap, use that method as it takes less work. If you don’t have the ability to upload a sitemap and the person who does isn’t going to do it when you want, use the Twitter method.

Twitter Indexing

Twitter Indexing is a method that utilizes Twitter to get Google to crawl a URL. The process is simple:

- Create a Twitter account or log in to a current account

- Tweet out the links you want to be crawled by Google

- Include popular hashtags to ensure Google finds it

Because Google has access to the Twitter Firehose, they’ll find these in record time and recrawl the URLs sooner than if you had just waited.

Sitemap Indexing

Sitemap Indexing is a method that utilizes a new sitemap to get Google to crawl a URL. It’s common in migrations to speed up the process and it works here also. The process is simple:

- Create a new sitemap containing the formerly blocked URLs

- Submit the sitemap to Google through Google Search Console

Google will see that a new sitemap has been submitted and come crawling shortly. You’re all set now.
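If you need to put that sitemap together by hand, a few lines of Python will do it. This is a bare-bones sketch: the build_sitemap name and the example URLs are our own, and it only writes the required loc entry for each URL, leaving out optional fields such as lastmod.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal XML sitemap containing one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes characters like & for us
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical list of URLs that were formerly blocked.
formerly_blocked = [
    "https://www.example.com/good-url",
    "https://www.example.com/goodbots/good-url/",
]
print(build_sitemap(formerly_blocked))
```

Save the output as an .xml file, upload it to your site, and submit its URL in the Sitemaps report in Google Search Console.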

You might have noticed that Request Indexing through URL Inspection in GSC was not included. That’s because at the time of writing this, you cannot request indexing on a URL that was formerly blocked. It’s stupid, so hopefully it gets fixed in the future.

Conclusion

You’ve now learned how to troubleshoot your robots.txt issues! As you can see, sometimes it’s fairly easy, but other times it can be a challenge. Even if your page was indexable when you checked, it’s always good to know what went wrong so that you can prevent that issue in the future.

If you come across an issue that you can’t diagnose with this guide, please reach out to us and we’ll take a look at it for you. Who knows, we might just find another issue to add to this guide.